Network Adapter Cards

The role of network adapter cards is to:

· Prepare data from the computer for the network cable

· Send the data to another computer

· Control the flow of data between the computer and the cabling system

NICs contain hardware and firmware (software routines in ROM) that implement the

· Logical Link Control and

· Media Access Control

functions of the Data Link layer of the OSI model.

Preparing Data

· Data moves along paths in the computer called a bus, which can be 8, 16, or 32 bits wide.

· On the network cable, data must travel in a single bit stream, in what's called a serial transmission (because one bit follows the next).

· The transceiver is the component responsible for translating parallel data (8, 16, or 32 bits wide) into a 1-bit-wide serial path.

· A unique network address, or MAC address, is coded into chips on the card.

· The card uses DMA (Direct Memory Access), in which the computer assigns memory space to the NIC.

If the card can't move data fast enough, the card's buffer RAM holds it temporarily during transmission or reception.
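The parallel-to-serial step can be sketched in a few lines of Python. This is an illustration only: it assumes a 16-bit bus word sent least-significant bit first, `serialize` and `deserialize` are hypothetical names, and real cards also apply a line encoding (for example, Manchester encoding on 10 Mbps Ethernet), which is left out here.

```python
def serialize(word: int, width: int = 16) -> list[int]:
    """Turn one parallel bus word into a serial stream of bits, LSB first."""
    if not 0 <= word < (1 << width):
        raise ValueError(f"word does not fit in {width} bits")
    return [(word >> i) & 1 for i in range(width)]


def deserialize(bits: list[int]) -> int:
    """Rebuild the parallel word on the receiving side from the serial bits."""
    word = 0
    for i, bit in enumerate(bits):
        word |= bit << i
    return word


if __name__ == "__main__":
    stream = serialize(0xA5C3)
    print(stream)                    # 16 single bits, one after another
    print(hex(deserialize(stream)))  # 0xa5c3
```

The round trip shows the essential point of the notes above: the same 16 bits that crossed the bus side by side cross the cable one at a time.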

Sending and Controlling Data

The NICs of the two computers exchanging data agree on the following:

1. Maximum size of the groups of data being sent

2. The amount of data to be sent before confirmation

3. The time intervals between sent data chunks

4. The amount of time to wait before confirmation is sent

5. How much data each card can hold before it overflows

6. The speed of the data transmission
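One way to picture this agreement: each card advertises its limits, and both settle on values that neither side will exceed. The sketch below assumes a simple rule (take the smaller of each size or speed limit and the longer of each timing) and uses hypothetical field names; real cards and protocols negotiate in their own, protocol-specific ways.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NicSettings:
    # Hypothetical fields mirroring the six items listed above.
    max_frame_bytes: int     # 1. maximum size of a group of data
    window_frames: int       # 2. data sent before a confirmation is required
    inter_frame_gap_us: int  # 3. time interval between sent chunks
    ack_wait_ms: int         # 4. time to wait before confirmation is sent
    buffer_bytes: int        # 5. how much the card can hold before it overflows
    speed_mbps: int          # 6. transmission speed


def agree(a: NicSettings, b: NicSettings) -> NicSettings:
    """Pick values both cards can honor: smaller limits, longer timings."""
    return NicSettings(
        max_frame_bytes=min(a.max_frame_bytes, b.max_frame_bytes),
        window_frames=min(a.window_frames, b.window_frames),
        inter_frame_gap_us=max(a.inter_frame_gap_us, b.inter_frame_gap_us),
        ack_wait_ms=max(a.ack_wait_ms, b.ack_wait_ms),
        buffer_bytes=min(a.buffer_bytes, b.buffer_bytes),
        speed_mbps=min(a.speed_mbps, b.speed_mbps),
    )
```

Under this rule, a 100 Mbps card talking to a 10 Mbps card would settle on 10 Mbps, and the result is the same whichever card starts the exchange.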

Network Card Configuration

· IRQ: a unique setting that requests service from the processor.

IRQ    Common Use                                          I/O Address
1      Keyboard
2(9)   Video card
3      COM2, COM4                                          2F0 to 2FF
4      COM1, COM3                                          3F0 to 3FF
5      Available (normally LPT2 or sound card)
6      Floppy disk controller
7      Parallel port (LPT1)
8      Real-time clock
9      Redirected IRQ2                                     370 to 37F
10     Available (maybe primary SCSI controller)
11     Available (maybe secondary SCSI controller)
12     PS/2 mouse
13     Math coprocessor
14     Primary hard disk controller
15     Available (maybe secondary hard disk controller)
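Since each device needs its own IRQ, choosing a setting for a new NIC means finding a line nobody else is using. This sketch copies the IRQ table into a Python dictionary, treats the lines marked "Available" as free, and lists the ones no installed device has already claimed. `free_irqs` is a hypothetical helper, not an operating system API.

```python
from typing import Optional

# IRQ assignments from the table; None marks a line listed as "Available".
IRQ_TABLE: dict[int, Optional[str]] = {
    1: "Keyboard",
    2: "Video card",
    3: "COM2, COM4",
    4: "COM1, COM3",
    5: None,   # normally LPT2 or a sound card
    6: "Floppy disk controller",
    7: "Parallel port (LPT1)",
    8: "Real-time clock",
    9: "Redirected IRQ2",
    10: None,  # maybe primary SCSI controller
    11: None,  # maybe secondary SCSI controller
    12: "PS/2 mouse",
    13: "Math coprocessor",
    14: "Primary hard disk controller",
    15: None,  # maybe secondary hard disk controller
}


def free_irqs(table: dict[int, Optional[str]], in_use=frozenset()) -> list[int]:
    """Return IRQs marked available that no installed device has claimed."""
    return sorted(irq for irq, owner in table.items()
                  if owner is None and irq not in in_use)
```

For example, on a machine where a sound card already sits on IRQ 5, `free_irqs(IRQ_TABLE, in_use={5})` leaves 10, 11, and 15 as candidates for the NIC.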

Base I/O port: Channel between CPU and hardware

Specifies a channel through which information flows between the computer's adapter card and the CPU, for example 300 to 30F.

Each hardware device must have a different base I/O port.

Base Memory address: Memory in RAM used for buffer area

Identifies a location in the computer's RAM to act as a buffer area to store incoming and outgoing data frames. For example, the NIC's base memory address might be D8000.

Each device needs its own unique address.

Some cards allow you to specify the size of the buffer (16 KB or 32 KB, for example).
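Both "each device needs its own" rules (base I/O port and base memory address) come down to address ranges that must not overlap. A minimal checker, assuming each resource is written as an inclusive hexadecimal range; the device list, including the conflicting second card, is hypothetical.

```python
from itertools import combinations


def overlaps(a: tuple[int, int], b: tuple[int, int]) -> bool:
    """True if two inclusive address ranges share at least one address."""
    return a[0] <= b[1] and b[0] <= a[1]


def conflicts(devices: dict[str, tuple[int, int]]) -> list[tuple[str, str]]:
    """Return every pair of devices whose address ranges collide."""
    return [
        (name_a, name_b)
        for (name_a, range_a), (name_b, range_b) in combinations(devices.items(), 2)
        if overlaps(range_a, range_b)
    ]


def buffer_range(base: int, size_kb: int) -> tuple[int, int]:
    """Inclusive memory range used by a buffer of size_kb kilobytes at base."""
    return base, base + size_kb * 1024 - 1


# Hypothetical base I/O assignments written as inclusive ranges.
IO_PORTS = {
    "NIC": (0x300, 0x30F),
    "COM2/COM4": (0x2F0, 0x2FF),
    "COM1/COM3": (0x3F0, 0x3FF),
    "Second card (hypothetical)": (0x308, 0x317),
}

if __name__ == "__main__":
    print(conflicts(IO_PORTS))                          # the NIC clashes with the second card
    print([hex(x) for x in buffer_range(0xD8000, 16)])  # ['0xd8000', '0xdbfff']
```

A 16 KB buffer at D8000 ends at DBFFF, so a second device could start at DC000 without a clash; choosing a 32 KB buffer would push the end out to DFFFF.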

Transceiver:

On some cards, the transceiver can be set to on-board or external. An external transceiver usually uses the AUI/DIX connector (Thicknet, for example).

Use jumpers on the card to select which one to use.

Data Bus Architecture

The NIC must

· match the computer's internal bus architecture and

· have the right cable connector for the cable being used

· ISA (Industry Standard Architecture): the original 8-bit, and later 16-bit, bus of the IBM PC.

· EISA (Extended Industry Standard Architecture): introduced by a consortium of manufacturers; offers a 32-bit data path.

· Micro Channel Architecture (MCA): introduced by IBM in its PS/2 line; functions as either a 16-bit or a 32-bit bus.

· PCI (Peripheral Component Interconnect): a 32-bit bus used by Pentium and Apple PowerPC computers; supports Plug and Play.

Improving Network Card Performance

Direct Memory Access (DMA):

Data is moved directly from the network adapter card's buffer to computer memory.

Shared Adapter Memory:

Network adapter card contains memory which is shared with the computer.

The computer identifies RAM on the card as if it were actually installed in the computer.

Shared System Memory:

The network adapter selects a portion of the computer's memory for its use.

This is the most common method.

Bus Mastering:

The adapter card takes temporary control of the computer's bus, freeing the CPU for other tasks.

Moves data directly to the computer's system memory.

Available on EISA and MCA.

Can improve network performance by 20% to 70%.

RAM buffering:

RAM on the adapter card acts as a buffer that holds data until the CPU can process it.

This keeps the card from being a bottleneck.
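RAM buffering can be modeled as a bounded first-in, first-out queue: frames arrive from the cable, wait on the card, and leave when the CPU reads them. The sketch below is a hypothetical model (the class and method names are invented for illustration) and assumes an overflowing frame is simply dropped and counted.

```python
from collections import deque
from typing import Optional


class AdapterBuffer:
    """Hypothetical model of on-card RAM holding frames until the CPU reads them."""

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity_bytes = capacity_bytes
        self.used_bytes = 0
        self.frames: deque[bytes] = deque()
        self.dropped = 0

    def receive(self, frame: bytes) -> bool:
        """Store an incoming frame; drop it if the buffer would overflow."""
        if self.used_bytes + len(frame) > self.capacity_bytes:
            self.dropped += 1
            return False
        self.frames.append(frame)
        self.used_bytes += len(frame)
        return True

    def read(self) -> Optional[bytes]:
        """Hand the oldest frame to the CPU (FIFO order), or None if empty."""
        if not self.frames:
            return None
        frame = self.frames.popleft()
        self.used_bytes -= len(frame)
        return frame
```

With a 16 KB buffer, two 8 KB frames fit; a third arriving before the CPU reads anything is dropped, which is exactly the bottleneck a larger on-card buffer is meant to avoid.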

On-board microprocessor:

Enables the adapter card to process its own data without the need for the CPU.

Wireless Adapter Cards

· Used to create an all-wireless LAN

· Add wireless stations to a cabled LAN

· Use a wireless concentrator, which acts as a transceiver to send and receive signals

Remote-Boot PROMs (Programmable Read-Only Memory)

· Enables diskless workstations to boot and connect to a network.

· Used where security is important.

Tuesday, December 30, 2008

Network Adapter Cards

Posted by mathy at 12:28 AM

Saturday, December 27, 2008

Putting Data on the Cable

Access Methods

The four major access methods are:

1. Carrier Sense Multiple Access with Collision Detection (CSMA/CD)

2. Carrier Sense Multiple Access with Collision Avoidance (CSMA/CA)

3. Token passing, which allows only a single opportunity to send data

4. Demand priority

Carrier Sense Multiple Access with Collision Detection (CSMA/CD)

1. Computer senses that the cable is free.

2. Data is sent.

3. If data is on the cable, no other computer can transmit until the cable is free again.

4. If a collision occurs, the computers wait a random period of time and retransmit.

o Known as a contention method because computers compete for the opportunity to send data. (Database apps cause more traffic than other apps)

o This can be a slow method

o More computers cause the network traffic to increase and performance to degrade.

o The ability to "listen" extends only to a cable length of 2,500 meters, so signals beyond that distance cannot be sensed.
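The random wait in step 4 is, on classic Ethernet, truncated binary exponential backoff: after the nth collision, a station waits a random whole number of slot times between 0 and 2^k - 1, where k = min(n, 10), and it gives up on the frame after 16 attempts. This sketch assumes 10 Mbps Ethernet, where one slot time is 512 bit times (51.2 µs); `backoff_delay_us` is a hypothetical name.

```python
import random

SLOT_TIME_US = 51.2   # 512 bit times at 10 Mbps
MAX_ATTEMPTS = 16     # the frame is abandoned after 16 attempts
BACKOFF_LIMIT = 10    # the exponent stops growing after 10 collisions


def backoff_delay_us(collisions: int, rng: random.Random) -> float:
    """Delay before retransmitting after the given number of collisions."""
    if collisions >= MAX_ATTEMPTS:
        raise RuntimeError("excessive collisions: frame dropped")
    k = min(collisions, BACKOFF_LIMIT)
    slots = rng.randint(0, 2 ** k - 1)  # randint includes both endpoints
    return slots * SLOT_TIME_US
```

Because the range of possible waits doubles with each collision, two stations that collided once are increasingly unlikely to pick the same delay again, which is how the contention resolves itself without any central control.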

Carrier Sense Multiple Access with Collision Avoidance (CSMA/CA)

In CSMA/CA, the computer broadcasts a warning packet before it begins transmitting on the wire. This packet eliminates almost all collisions on the network, because each computer that hears the warning packet refrains from transmitting.

All other computers wait until the data is sent.

The major drawback of trying to avoid collisions is that broadcasting the intent to send a message increases network traffic.

Token Passing

Special packet is passed from computer to computer.

A computer that wants to transmit must wait for a free token.

Computer takes control of the token and transmits data. Only this computer is allowed to transmit; others must wait for control of the token.

Receiving computer strips the data from the token and sends an acknowledgment.

Original sending computer receives the acknowledgment and sends the token on.

The token comes from the Nearest Active Upstream Neighbor, and when the computer is finished, it goes to the Nearest Active Downstream Neighbor.

Uses "beaconing" to detect faults, so this method is fault tolerant.

No contention, so all computers on the network have equal access.

No collisions.
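The loop below is a simplified sketch of the token-passing rules: the token moves from each station to its downstream neighbor, and only the station holding it may send, one frame per visit. It assumes a fixed, fault-free ring, models the acknowledgment as a log entry, and leaves out beaconing and token recovery; `token_ring` is a hypothetical name.

```python
from collections import deque


def token_ring(stations: list[str], queues: dict[str, deque], rounds: int) -> list[str]:
    """Circulate the token `rounds` times and log each transmission in order."""
    log = []
    holder = 0  # index of the station that currently has the free token
    for _ in range(rounds * len(stations)):
        name = stations[holder]
        outgoing = queues.get(name)
        if outgoing:
            # Only the token holder may transmit, and only one frame per visit.
            dest, payload = outgoing.popleft()
            # The destination acknowledges; the sender then releases the token.
            log.append(f"{name}->{dest}: {payload} (ack)")
        # Pass the token to the nearest active downstream neighbor.
        holder = (holder + 1) % len(stations)
    return log
```

With stations A, B, C, and D, where A has two frames queued and C has one, A sends its first frame, C gets its turn, and only then does A send its second frame. No station can monopolize the cable, which is the "equal access" property noted above.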

Demand Priority

Demand priority is used by the 100 Mbps standard called 100VG-AnyLAN. It is "hub-based."

1. Repeaters manage network access by performing cyclical searches for requests to send from all nodes on the network. The repeater, or hub, is responsible for noting all addresses, links, and end nodes and verifying that they are all functioning. An "end node" can be a computer, bridge, router, or switch.

2. Certain types of data are given priority if data reaches the repeater simultaneously. If two requests have the same priority, both are serviced by alternating between the two.
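This sketch models those two rules under simplifying assumptions: every request is known when the hub's scan starts, and there are two priority levels, normal and high, as in IEEE 802.12 (100VG-AnyLAN). It does not model a high-priority request arriving in the middle of normal-priority service; `service_order` is a hypothetical name.

```python
from collections import deque

HIGH, NORMAL = "high", "normal"


def service_order(pending: dict[str, tuple[str, int]]) -> list[str]:
    """Return the order in which the hub grants frames.

    `pending` maps each node (in the hub's port-scan order) to a
    (priority, frames waiting) pair. All high-priority frames are granted
    before any normal-priority frame; nodes at the same priority take
    turns, one frame each, instead of one node sending everything first.
    """
    order = []
    for level in (HIGH, NORMAL):
        turn = deque(
            (node, count) for node, (prio, count) in pending.items() if prio == level
        )
        while turn:
            node, count = turn.popleft()
            order.append(node)                  # grant one frame to this node
            if count > 1:
                turn.append((node, count - 1))  # go to the back of the line
    return order
```

For example, if B and C have high-priority frames (two and one) and A and D have normal-priority frames (two and one), the hub grants B, C, B, then A, D, A.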

Advantages over CSMA/CD

1. Computers use four pairs of wires, so they can send and receive simultaneously.

2. Transmissions are through the HUB and are not broadcast to all other computers on the network.

3. There is only communication between the sending computer, the hub and the destination computer.

Other methods

Appletalk

The cabling system for an AppleTalk network is called LocalTalk.

LocalTalk uses CSMA/CA

AppleTalk has a dynamic network addressing scheme.

During bootup, the AppleTalk card broadcasts a random number on the network as its card address. If no other computer has claimed that address, the broadcasting computer configures the address as its own. If there is a conflict with another computer, the computer tries different addresses until it finds a working configuration.
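The claim-and-retry scheme can be sketched as a loop. Assumptions, loudly: the set of already-claimed addresses stands in for broadcasting a candidate and listening for an owner, and the address range 1 to 254 reflects LocalTalk's 8-bit node IDs with 0 and 255 reserved; `claim_address` is a hypothetical name.

```python
import random


def claim_address(taken: set[int], rng: random.Random,
                  low: int = 1, high: int = 254) -> int:
    """Keep trying random addresses until one is not already claimed."""
    if all(addr in taken for addr in range(low, high + 1)):
        raise RuntimeError("no free address left on this network")
    while True:
        candidate = rng.randint(low, high)
        # Checking the set stands in for broadcasting the address and
        # listening for another computer that already owns it.
        if candidate not in taken:
            taken.add(candidate)
            return candidate
```

Each new computer adds its address to the shared set, so the next computer to boot automatically avoids it, which mirrors how no administrator has to assign AppleTalk node addresses by hand.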

ARC Net

ARC Net uses a token passing method in a logical ring similar to Token Ring networks.

However, the computers in an ARC Net network do not have to be connected in any particular fashion.

ARC Net can utilize a star, bus, or star bus topology.

Data transmissions are broadcast throughout the entire network, which is similar to Ethernet.

However, a token is used to allow computers to speak in turn.

The token is not passed around a physical ring, because ARC Net does not use the ring topology; instead, the token is passed to the station with the next-highest number.

Use DIP switches to set each workstation's number (the station identifier). Give workstations that you want beside each other in the token order consecutive numbers, so the token is passed to the next computer efficiently.
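The "next-highest station" rule can be sketched directly. This assumes the set of active station IDs is already known and that the token wraps from the highest ID back to the lowest; `next_station` and `token_order` are hypothetical names.

```python
def next_station(active_ids: list[int], current: int) -> int:
    """Return the station that receives the token after `current`.

    The token goes to the next-highest active station ID, wrapping from the
    highest ID back to the lowest.
    """
    higher = [sid for sid in active_ids if sid > current]
    return min(higher) if higher else min(active_ids)


def token_order(active_ids: list[int], start: int, hops: int) -> list[int]:
    """List the stations the token visits, starting from `start`."""
    order = [start]
    for _ in range(hops):
        order.append(next_station(active_ids, order[-1]))
    return order
```

With stations 3, 7, 12, and 200 active, the token visits 3, 7, 12, 200, and then returns to 3, regardless of how the cables are physically laid out.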

ARC Net isn't popular anymore, because its speed is a mere 2.5 Mbps.

Most important ARC Net facts for you to know:

ARC Net uses RG-62 (93 ohms) cabling;

it can be wired as a star, bus, or star bus; and

it uses a logical-ring media access method.

Summary Chart

Method            Type of Communication    Type of Access Method    Type of Network
CSMA/CD           Broadcast-based          Contention               Ethernet
CSMA/CA           Broadcast-based          Contention               LocalTalk
Token Passing     Token-based              Non-contention           Token Ring, ARCnet
Demand Priority   Hub-based                Contention               100VG-AnyLAN

Posted by mathy at 12:19 AM

Thursday, December 25, 2008

Protocols

Protocols are rules and procedures for communication.

How Protocols Work

The Sending Computer

Breaks data into packets.

Adds addressing information to the packet

Prepares the data for transmission.

The Receiving Computer (same steps in reverse)

Takes the packet off the cable.

Strips the data from the packet.

Copies the data to a buffer for reassembly.

Passes the reassembled data to the application.

Protocol Stacks (or Suites)

A combination of protocols, each layer performing a function of the communication process.

Ensure that data is prepared, transferred, received and acted upon.

The Binding Process

Allows more than one protocol to function on a single network adapter card (e.g., both TCP/IP and IPX/SPX can be bound to the same card).

Binding order dictates which protocol the operating system uses first.

Binding also happens within the operating system architecture: for example, TCP/IP may be bound to the NetBIOS session layer above it and to the network card driver below it. The NIC device driver is in turn bound to the NIC.
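The effect of binding order can be illustrated with a short loop: the operating system tries the bound protocols in order and uses the first one the other computer also speaks. This is a hypothetical model (`connect` is an invented name, and real redirectors are considerably more involved), assuming each attempt with an unsupported protocol simply fails.

```python
def connect(binding_order: list[str], server_protocols: set[str]):
    """Try each bound protocol in binding order; return (protocol, attempts)."""
    tried = []
    for protocol in binding_order:
        tried.append(protocol)
        if protocol in server_protocols:
            return protocol, tried
    return None, tried
```

If TCP/IP is bound first but the server speaks only IPX/SPX, the connection still succeeds, but only after a wasted TCP/IP attempt; binding IPX/SPX first reaches the same server in one try.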

Standard Stacks

ISO/OSI

IBM SNA (Systems Network Architecture)

Digital DECnet

Novell NetWare

Apple AppleTalk

TCP/IP

Protocol types map roughly onto three groups of OSI model layers:

Application-level service users: Application, Presentation, and Session layers

Transport services: Transport layer

Network services: Network, Data Link, and Physical layers

Application Protocols

Work at the upper layers of the OSI model and provide application-to-application interaction and data exchange.

Examples:

APPC: IBM's peer-to-peer SNA protocol, used on AS/400s.

FTAM: an OSI file access protocol.

X.400: international e-mail transmissions.

X.500: file and directory services across systems.

SMTP: Internet e-mail.

FTP: Internet file transfer

SNMP: Internet network management protocol.

Telnet: Internet protocol for logging on to remote hosts.

Microsoft SMB: client shells and redirectors.

NCP: Novell client shells or redirectors.

AppleTalk and AppleShare: Apple's protocol suite.

AFP: Apple's protocol for remote file access.

DAP (data access protocol): DECnet file access protocol.

Transport Protocols

These protocols provide communication sessions between computers and ensure data is moved reliably between computers.

Examples:

TCP (transmission control protocol): internet protocol for guaranteed delivery of sequenced data.

SPX (sequenced packet exchange): part of Novell's IPX/SPX protocol suite.

NWLink: Microsoft implementation of IPX/SPX.

NetBEUI: establishes communications sessions between computers and provides the underlying data transport services.

ATP, NBP: Apple's communication session and transport protocols.

Network Protocols

These provide link services. They also handle

o addressing and routing,

o error checking, and

o retransmission requests.

They define the rules for communicating in a particular networking environment, such as Ethernet or Token Ring.

Examples:

IP (Internet Protocol): packet forwarding and routing.

IPX: (Internetwork Packet Exchange): Novell's protocol for packet forwarding and routing.

NWLink: Microsoft implementation of IPX/SPX.

NetBEUI: Transport for NetBIOS sessions and applications.

DDP (datagram delivery protocol): An AppleTalk data transport protocol.

Posted by mathy at 2:26 PM

Tuesday, December 23, 2008

How a Network Functions

The OSI Model

Specifications for network architecture from the International Organization for Standardization (ISO).

Called the Open Systems Interconnection, or OSI, model.

A seven-layer model; higher layers perform more complex tasks.

Each layer provides services for the next higher layer.

Each layer communicates logically with its associated layer on the other computer.

Packets are sent from one layer to another in the order of the layers, from top to bottom on the sending computer and then in reverse order on the receiving computer.

OSI Layers

Application

Presentation

Session

Transport

Network

Data Link

Physical

Application Layer

Serves as a window for applications to access network services.

Handles general network access, flow control and error recovery.

Presentation Layer

Determines the format used to exchange data among the networked computers.

Translates data from the format used by the Application layer into an intermediate format.

Responsible for protocol conversion, data translation, data encryption, data compression, character conversion, and graphics expansion.

The redirector operates at this layer.

Session Layer

Allows two applications running on different computers to establish, use, and end a connection called a session.

Performs name recognition and security.

Provides synchronization by placing checkpoints in the data stream.

Implements dialog control between communicating processes.

Transport Layer

Responsible for packet creation.

Provides an additional connection level beneath the Session layer.

Ensures that packets are delivered error free, in sequence with no losses or duplications.

Unpacks, reassembles and sends receipt of messages at the receiving end.

Provides flow control, error handling, and solves transmission problems.

Network Layer

Responsible for addressing messages and translating logical addresses and names into physical addresses.

Determines the route from the source to the destination computer.

Manages traffic such as packet switching, routing and controlling the congestion of data.

Data Link Layer

Sends data frames from the Network layer to the Physical layer.

Packages raw bits into frames for the Network layer at the receiving end.

Responsible for providing error free transmission of frames through the Physical layer.

Physical Layer

Transmits the unstructured raw bit stream over a physical medium.

Relates the electrical, optical, mechanical, and functional interfaces to the cable.

Defines how the cable is attached to the network adapter card.

Defines data encoding and bit synchronization.

OSI Model Enhancements

The bottom two layers, Data Link and Physical, define how multiple computers can simultaneously use the network without interfering with each other.

The IEEE 802 project divides the Data Link layer into the Logical Link Control and Media Access Control sublayers.

Logical Link Control

Manages error and flow control, and

defines logical interface points called Service Access Points (SAPs). These SAPs are used to transfer information to upper layers.

Media Access Control

Communicates directly with the network adapter card and

is responsible for delivering error-free data between two computers.

The 802.3, 802.4, 802.5, and 802.12 categories define standards for both this sublayer and the Physical layer.

Drivers

A device driver is software that tells the computer how to drive or work with the device so that the device performs the job it's supposed to.

Network adapter card drivers are also called

network drivers,

MAC drivers, or

NIC drivers.

They provide communication between a network adapter card and the redirector in the computer.

They reside in the Media Access Control sublayer of the Data Link layer; therefore, the NIC driver ensures direct communication between the computer and the NIC.

The Media Access Control driver is another name for the network card device driver.

When installing a driver, you need to know these settings:

IRQ

I/O Port Address

Memory Mapped (Base Memory Address)

Transceiver Type

Packets

Data is broken down into smaller, more manageable pieces called packets.

Special control information is added in order to:

disassemble packets

reassemble packets

check for errors

Types of data sent include:

Information such as messages or files.

Computer control data and commands and requests.

Session control codes such as error correction and retransmission requests.

Original block of data is converted to a packet at the Transport layer.
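Breaking a block into packets and putting it back together can be sketched as follows. Assumptions: each packet carries only a sequence number and a slice of the data, the 512-byte payload limit is borrowed from the lower end of the range given for packet data, and `packetize`/`reassemble` are hypothetical names.

```python
def packetize(data: bytes, max_payload: int) -> list[tuple[int, bytes]]:
    """Split a block of data into (sequence number, payload) packets."""
    return [
        (seq, data[offset:offset + max_payload])
        for seq, offset in enumerate(range(0, len(data), max_payload))
    ]


def reassemble(packets: list[tuple[int, bytes]]) -> bytes:
    """Put packets back in sequence order and rejoin their payloads."""
    return b"".join(payload for _, payload in sorted(packets, key=lambda p: p[0]))
```

A 1,300-byte block becomes three packets of 512, 512, and 276 bytes, and the sequence numbers let the receiver rebuild the original even if the packets arrive out of order.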

Packet Components

Header

An alert signal to indicate that the packet is being transmitted.

Source address.

Destination address.

Clock synchronization information.

Data

Contains actual data being sent.

Varies from 512 to 4096 bytes (4K), depending on the network

Trailer

Content varies by protocol.

Usually contains a CRC.
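To show what the CRC in the trailer is for, here is a sketch that builds a packet from a header, data, and a 4-byte CRC-32 trailer, and lets the receiver verify it. Ethernet's frame check sequence uses the same CRC-32 polynomial as Python's `zlib.crc32`, but the layout here (6-byte destination, then 6-byte source, no preamble or clock-sync field) and the little-endian packing of the trailer are simplifications chosen for the sketch.

```python
import struct
import zlib


def build_packet(dest: bytes, src: bytes, data: bytes) -> bytes:
    """Header (destination + source address), data, then a CRC-32 trailer."""
    body = dest + src + data
    return body + struct.pack("<I", zlib.crc32(body))


def check_packet(packet: bytes) -> bool:
    """Recompute the CRC over everything except the trailer and compare."""
    body, trailer = packet[:-4], packet[-4:]
    return struct.unpack("<I", trailer)[0] == zlib.crc32(body)
```

If even one bit of the header or data is damaged on the cable, the receiver's recomputed CRC no longer matches the trailer, and the packet can be discarded or a retransmission requested.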

Packet Creation

Look at the example on pp. 201 - 204

Begins at the Application layer where data is generated.

Each layer subsequently adds information to the packet; the corresponding layer on the receiving machine reads the information.

Transport layer breaks the data into packets and adds sequencing information needed to reassemble the data at the other end; the structure of the packets is defined by the common protocol being used between the two computers.

Data is passed through the Physical layer to the cable.
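The layer-by-layer process can be sketched with text headers: on the way down each layer adds its own header, and on the way up the matching layer reads and removes it. The bracketed strings are placeholders invented for illustration; real headers are binary, and the Data Link layer also adds a trailer.

```python
# Layers that add information, from top to bottom (Physical only sends bits).
LAYERS = ["Application", "Presentation", "Session", "Transport", "Network", "Data Link"]


def send(data: str) -> str:
    """Wrap the data with one header per layer, top to bottom."""
    for layer in LAYERS:
        data = f"[{layer}]" + data
    return data  # the Physical layer would now put this on the cable


def receive(frame: str) -> str:
    """Strip the headers in reverse order, checking each one."""
    for layer in reversed(LAYERS):
        header = f"[{layer}]"
        if not frame.startswith(header):
            raise ValueError(f"missing {layer} header")
        frame = frame[len(header):]
    return frame
```

The outermost header on the cable belongs to the Data Link layer, so it is the first one the receiving computer reads, matching the "reverse order" rule in the OSI notes.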

Packet Addressing

Every NIC sees all packets sent on its cable segment but interrupts the computer only if the packet address matches the computer's address.

A broadcast-type address gets the attention of all computers on the network.
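The filtering decision each NIC makes can be written as a one-line check. This sketch assumes Ethernet-style MAC addresses written as colon-separated hex, where the broadcast address is all ones (FF:FF:FF:FF:FF:FF); multicast groups and promiscuous mode are left out, and `should_interrupt` is a hypothetical name.

```python
BROADCAST = "FF:FF:FF:FF:FF:FF"


def should_interrupt(my_address: str, destination: str) -> bool:
    """Decide whether the NIC passes a frame up to the computer."""
    dest = destination.upper()
    return dest == BROADCAST or dest == my_address.upper()
```

Every other frame on the segment is ignored by the card itself, so the CPU is never disturbed by traffic meant for other computers.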

Posted by mathy at 12:16 AM

Monday, December 22, 2008

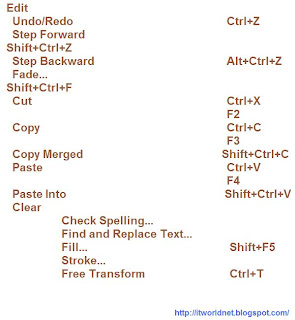

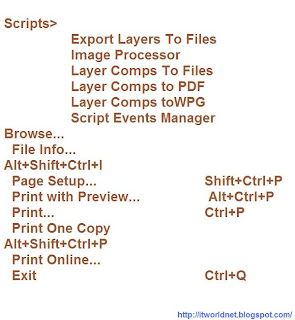

Adobe Photoshop Shortcuts

Transform>

Again Shift+Ctrl+T

Scale

Rotate

Skew

Distort

Perspective

Warp

Rotate 180°

Rotate 90° CW

Rotate 90° CCW

Flip Horizontal

Flip Vertical

Define Brush Preset...

Define Pattern...

Define Custom Shape...

Purge>

Undo

Clipboard

Histories

All

Adobe PDF Presets...

Preset Manager...

Color Settings... Shift+Ctrl+K

Assign Profile...

Convert to Profile...

Keyboard Shortcuts... Alt+Shift+Ctrl+K

Menus... Alt+Shift+Ctrl+M

Preferences>

General... Ctrl+K

File Handling...

Display & Cursors...

Transparency & Gamut...

Units & Rulers...

Guides, Grid & Slices...

Plug-Ins & Scratch Disks...

Memory & Image Cache...

Type...

Posted by mathy at 12:20 AM

Sunday, December 21, 2008

Saturday, December 20, 2008

Thursday, December 18, 2008

Tuesday, December 16, 2008

Continuous Professional Development (CPD) Programme

The pace of change in the application of information technology is so rapid that it is easy for the IS professional, focussed on a specific assignment, to fall behind recent developments. Continuous Professional Development is about keeping vital skills current – and it's not just confined to skills in information technology. Employers need to know that staff are keeping themselves informed on the latest developments, and are broadening their skills base as their job specification changes. Practitioners need a simple and practical method of monitoring the CPD work that they do.

Continuing Professional Development (CPD) is about continuously updating professional knowledge, personal skills and competencies. Even in retirement many people may make a contribution to their profession and still have personal learning goals.

Principles and Benefits of CPD

Principles:

Professional development needs to be carefully planned, properly evaluated, and carefully recorded to provide maximum benefit.

The provision of world-class management development resources can improve skill levels, increase motivation and aid recruitment and retention of key talent.

The professional should always be actively seeking to improve performance

Planning, evaluating, recording, and development are continuous

Development is a personal matter and should be owned and managed by the individual with the support of the employer

Learning outcomes should relate to the overall career plan of the individual and recognize current organizational needs where possible

Investment in training and development should be regarded as valuable as any other form of investment

Any development plan must start with the individual's current learning needs, take into account previous development, fit into their overall life and career plan and, where relevant, meet current organisational needs

Development goals should be clearly defined and accompanied by specific measures of what will constitute a successful outcome

Members should regularly assess their achievement against these measures, either as self-assessment or through peer assessment or with the help of a mentor

Investment in training, development and learning should be regarded as a fundamental principle of enhancing professional and commercial success

Enabling personal, professional and organisational growth

Enhancing patient care through clinical effectiveness and multiprofessional working

Adopting the principles of adult learning, featuring reflectiveness, responsiveness and personal review

Fairness in terms of access to, and time for, learning

Being supportive to colleagues in terms of a flexible approach to their career development

Mutual commitment in terms of sharing responsibility and agreement on resources

Being purposeful in terms of linking educational planning to clear organisational and individual goals

Supporting individuals through personal transitions and transformations in significant features of the service they deliver

Assuring educational effectiveness in terms of evaluating the quality and outcome for educational activities

Assuring cost effectiveness in terms of how learning opportunities are provided

Benefits:

Employers have a better skilled and more efficient work force

Informed employers attract high calibre staff and keep them

Good CPD policies can provide the most cost-effective means of providing training, particularly if carried out in partnership with institutions

Individuals will have the skills to react to a changing profession more readily

Staff will be more adaptable aiding diversification opportunities

Employer support for CPD improves employees' motivation and retention

Although the benefits of CPD are primarily felt by the individual, there are benefits to be gained by employers and the Society of Archivists as indicated by the table below.

Benefits to the Individual

Increases self-esteem as you look back on achievements

Financial reward: CPD could support a claim for promotion

Encourages analytical thinking about your job, the tasks that you do, and how you do them, thereby increasing performance

Provides the opportunity to enrich and develop your existing job

Aids career development; you can plan to learn skills which will equip you to move your career in a direction which interests you

Reflective practice encourages you to assess alternative approaches to tasks and to think creatively

Reflective practice can help you to feel in control of your work circumstances and might help to relieve stress

A written record of your CPD allows you to demonstrate your skills in a very concrete way

CPD can help you recognize the way in which skills learnt in other areas of your life could be applied in the workplace

Reflective practice is a safe environment for thinking about and assessing your mistakes, problems and perceived failures. It allows you to ask “what did I learn from this?” and think about what you would do differently in the future

A written record of CPD can help you to define and make clear in your mind your achievements and the progress you have made

Posted by mathy at 12:32 PM

Monday, December 15, 2008

Computer Assisted Software Engineering CASE

Introduction

Computer-assisted software engineering (CASE) tools are a set of programs and aids that assist analysts, software engineers, and programmers during all phases of the system development life cycle (the stages in the system development life cycle are Preliminary Investigation, Analysis, Design, Implementation, and Installation). The implementation of a new system requires many tasks to be organized and completed correctly and efficiently. CASE tools were developed to automate these processes and to ease the task of coordinating the events that need to be performed in the system development life cycle. CASE tools can be divided into two main groups: those that deal with the first three parts of the system development life cycle (preliminary investigation, analysis, and design) are referred to as Front-End CASE tools or Upper CASE tools, and those that deal mainly with implementation and installation are referred to as Back-End CASE tools or Lower CASE tools.

The major reason for the development of CASE tools was to increase the speed of systems development. By doing so, companies were able to develop systems without facing the problem of business needs changing before the system could be finished. Quicker installation also allowed companies to compete more effectively, using newly developed systems that matched their current business needs. In a highly competitive market, staying on the leading edge can make the difference between success and failure.

CASE tools also allowed analysts to allocate more time to the analysis and design stages of development and less time to coding and testing. Previous methods saw only 35% of the time being spent on analysis and design and 65% on coding and testing. CASE tools allowed analysts to spend as much as 85% of the time in the analysis and design stages. This resulted in systems that more closely mirrored the users' requirements and allowed more efficient and effective systems to be developed.

By using a set of CASE tools, information generated by one tool can be passed to other tools, which in turn use the information to complete their tasks and then pass the new information back to the system to be used by other tools. This allows important information to be passed efficiently and effectively between many planning tools with practically no resistance. With the old methods, incorrect information could easily be passed between designers or simply be lost in the shuffle of papers.

History of Case Tools

CASE tools began with the simple word processor, which was used for creating and manipulating documentation. The seventies saw the introduction of graphical techniques and structured data flow diagrams. Up until this point, design and specifications in pictorial form had been extremely complex and time-consuming to change. The introduction of CASE tools to aid this process allowed diagrams to be easily created and modified, improving the quality of software designs. Data dictionaries, very useful documents that hold the details of each data type and process within a system, are the direct result of the arrival of data flow design and structured analysis made possible through the improvements of CASE tools. Early graphics packages were soon replaced by specialist packages that enabled editing, updating, and printing multiple versions of a design. Eventually, graphic tools integrated with data dictionary databases to produce powerful design and development tools that could hold complete design cycle documents. As a final step, error checking and test case generators were included to validate software design. All these processes can now be integrated into a single CASE tool that supports the whole development cycle.

Early 1980s: computer-aided documentation; computer-aided diagramming; analysis and design tools

Mid 1980s: automated design analysis and checking; automated system information repositories

Late 1980s: automated code generation from design specifications; linking design automation

Early 1990s: intelligent methodology drivers; habitable user interfaces; reusability as a development methodology

Advantages of CASE Tools

Current trends show a significant decrease in the cost of hardware with a corresponding increase in the cost of software. This reflects the labor-intensive nature of software. Developing effective software packages takes the work of many people and can take years to complete. Furthermore, small errors in the logic of the programs can have huge consequences for the user. CASE tools are an important part of resolving the problems of application development and maintenance. CASE tools significantly alter the time taken by each phase and the distribution of cost within the software life cycle. Software engineers are now placing greater emphasis on analysis and design, and much of the code can now be generated automatically from detailed specifications. Improvements in both these areas, made possible through the use of CASE tools, are showing dramatic reductions in maintenance costs. The power of CASE tools lies in their central repository, which contains descriptions of all the central components of the system. These descriptions are used at all stages of the cycle: creation of input/output designs, automatic code generation, and so on. Later tasks continue to add to and build upon this repository, so that by the conclusion of the project it contains a complete description of the entire system. This is a powerful device that was not feasible before the introduction of CASE tools.

More specifically CASE tools:

Ensure consistency, completeness, and conformance to standards

Encourage an interactive, workstation environment

Speed up the development process

Allow precision to be replicated

Reduce costs, especially in maintenance

Increase productivity

Make structured techniques practical

Selection of a CASE Tool

With thousands of tools available, deciding which one will best fit your needs is not easy. The failure or success of the tool is relative to your expectations, so a clear understanding of the specifications and expectations of the CASE tool is essential before beginning your search. There are three common points of failure: the selection process itself, the prerequisites of the tool, and your business. First, the evaluation and selection of a CASE tool is a major project that should not be taken lightly; time and resources need to be allocated to identifying the criteria on which the selection is to be based. Next, examine whether these expectations are reasonable. Make sure you have a clear understanding of the tool's purpose; there must be a common vision of the systems development environment in which the tools will be used. Finally, know your organization and its needs. Identify the infrastructure and, in particular, the level of discipline in the information technology department. Is your selection of a CASE tool compatible with the personalities and expertise of the individuals who will be using it? If these three areas are taken into consideration, the tool is far more likely to succeed and offer all the benefits outlined above to your development project.

Upper (Front-End) CASE Tools

During the initial stages of system development, analysts must determine the system requirements and analyze this information to design the most effective system possible. To do this, an analyst uses data flow diagrams, data dictionaries, process specifications, documentation and structure charts. When these tasks are done manually, redrawing the diagrams every time the system changes becomes very tedious; computerized CASE tools allow such changes to be made quickly and accurately. A bigger problem with the manual method is that a change to one diagram may require many changes throughout the rest of the documentation. For a very large system, it is easy to forget to make the change everywhere, leaving an erroneous representation of the system that can cause problems during the implementation phase. With a CASE tool's analysis feature, the information shared among the diagrams and documentation can be cross-checked to ensure that it matches.
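The cross-check described above can be sketched roughly as follows. This is a minimal illustration, not how any particular CASE product works: the `DataFlow` record, the `check_consistency` helper and the sample diagram names are all hypothetical. It reports flows drawn on a diagram but missing from the data dictionary, and dictionary entries that no diagram uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFlow:
    """One labeled arrow on a data flow diagram (hypothetical repository record)."""
    name: str
    source: str
    target: str
    diagram: str


def check_consistency(flows, dictionary):
    """Cross-check diagram flows against a data dictionary {name: definition}.

    Returns a list of problem descriptions; an empty list means they agree.
    """
    problems = []
    # Every flow drawn on a diagram should be defined in the data dictionary.
    for flow in flows:
        if flow.name not in dictionary:
            problems.append(
                f"{flow.diagram}: flow '{flow.name}' is not defined in the data dictionary"
            )
    # Every dictionary entry should appear on at least one diagram.
    used = {flow.name for flow in flows}
    for name in sorted(dictionary):
        if name not in used:
            problems.append(f"data dictionary: '{name}' is not used on any diagram")
    return problems


flows = [
    DataFlow("order", "Customer", "Process Order", "DFD-0"),
    DataFlow("invoice", "Process Order", "Customer", "DFD-0"),
]
dictionary = {"order": "customer_id + item_list", "payment": "amount + date"}

problems = check_consistency(flows, dictionary)
for problem in problems:
    print(problem)
```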

CASE tools are also very helpful during the design phase of system development. CASE provides tools to help develop prototype screens, reports and interfaces, which users and management can then check and approve very quickly. This avoids having to redesign, during the implementation phase, interfaces that users do not like or that do not complete the tasks they are supposed to handle.

Lower (Back-End) CASE Tools

Lower CASE tools are most often used to help generate program code. Fourth-generation programming languages and code generators measurably reduce the time and cost needed to produce the code that runs the system. Code generators also produce high-quality code that is easy to maintain and portable (i.e., easily transferred to other hardware platforms).

Fourth-generation program code is also much easier to test. Because fourth-generation code focuses on the logic of the program, there are far fewer lines of code for the programmer to examine and test. Fewer lines also make the program easier to maintain, since fewer lines need to be examined and only the higher-level fourth-generation code needs to be changed, not the lower-level third-generation code.

Code generators can also interact with the upper CASE tools: information stored by the upper CASE tools can be accessed by the code generators to aid in developing the code. Code generators also allow specialized code to be inserted into the generated program code, so that special features can be designed and implemented in the program.
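A toy version of a code generator driven by repository information might look like the sketch below. It assumes a hypothetical repository entry (`CUSTOMER_ENTITY`) and a hypothetical `generate_class` helper; real generators target many languages and keep far more metadata. The `BEGIN CUSTOM CODE` / `END CUSTOM CODE` markers illustrate one common way to let developers insert specialized code that survives regeneration, but the marker names are invented here.

```python
# Hypothetical repository entry, as an upper CASE tool might store it.
CUSTOMER_ENTITY = {
    "name": "Customer",
    "fields": [("customer_id", "int"), ("name", "str"), ("credit_limit", "float")],
}


def generate_class(entity, custom_code=""):
    """Generate Python source for a simple record class from a repository entry.

    Hand-written code is placed between marker comments so a regenerating
    tool could find and preserve it (assumes at least one field).
    """
    params = ", ".join(f"{name}: {type_}" for name, type_ in entity["fields"])
    lines = [f"class {entity['name']}:", f"    def __init__(self, {params}):"]
    for name, _ in entity["fields"]:
        lines.append(f"        self.{name} = {name}")
    lines.append("    # BEGIN CUSTOM CODE")
    for custom_line in custom_code.splitlines():
        lines.append("    " + custom_line)  # indent custom code into the class body
    lines.append("    # END CUSTOM CODE")
    return "\n".join(lines) + "\n"


custom = "def can_order(self, amount):\n    return amount <= self.credit_limit\n"
source = generate_class(CUSTOMER_ENTITY, custom)
print(source)

# Load the generated class and use it.
namespace = {}
exec(source, namespace)
Customer = namespace["Customer"]
alice = Customer(1, "Alice", 500.0)
print(alice.can_order(200.0))  # True
```

Keeping hand-written code inside marked regions is a design choice: a regenerating tool can rewrite everything outside the markers while copying the custom block through unchanged.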

Benefits and drawbacks of CASE tools:

Benefits of CASE tools:

Produce systems with a longer effective operational life

Produce systems that more closely meet user needs and requirements

Produce systems with excellent documentation

Produce systems that need less systems support

Produce more flexible systems

Drawbacks of CASE tools:

Produce initial systems that are more expensive to build and maintain

Require more extensive and accurate definition of user needs and requirements

May be difficult to customize

Require training of maintenance staff

May be difficult to use with existing systems

Posted by mathy at 11:42 AM

Sunday, December 14, 2008

Prototyping Model and Object-Oriented Model

The prototyping model is a software development process that begins with requirements collection, followed by prototyping and user evaluation.

Often the end users may not be able to provide a complete set of application objectives, detailed input, processing, or output requirements in the initial stage.

After the user evaluation, another prototype will be built based on feedback from users, and again the cycle returns to customer evaluation.

The cycle starts by listening to the user, followed by building or revising a mock-up and letting the user test it, and then returns to listening. There is now a new generation of tools, called Application Simulation Software, that help simulate applications quickly before they are developed.

Advantages of prototyping

May provide the proof of concept necessary to attract funding

Early visibility of the prototype gives users an idea of what the final system looks like

Encourages active participation between users and producers

Enables higher output for users

Cost-effective (reduces development costs)

Increases system development speed

Helps identify any problems with the efficacy of earlier design, requirements analysis and coding activities

Helps to refine the potential risks associated with the delivery of the system being developed

Disadvantages of prototyping

Users' expectations of the prototype may exceed its performance

Possibility of causing systems to be left unfinished

Possibility of implementing systems before they are ready

Producer might produce a system inadequate for overall organization needs

Users can become too involved, while the program may not be built to a high standard

Structure of system can be damaged since many changes could be made

Producer might get too attached to it (might cause legal involvement)

Not suitable for large applications

Electronics prototyping

In electronics, prototyping means building an actual circuit to a theoretical design to verify that it works, and to provide a physical platform for debugging it if it does not.

The prototype is often constructed using techniques such as wire wrap, Veroboard or breadboard, which create an electrically correct circuit that is not physically identical to the final product.

For large quantities, however, it is cheaper and faster to mass produce custom printed circuit boards than these other kinds of prototype boards. This is for the same reason that writing out a poem is fastest by hand for one or two copies, but faster by printing press if you need several thousand.

Rapid Electronics Prototyping

The proliferation of quick-turn PCB fab companies and quick-turn PCB assembly houses has enabled the concepts of rapid prototyping to be applied to electronic circuit design. It is now possible, even with the smallest passive components and the largest fine-pitch packages, to have boards fabricated and parts assembled in a matter of days.

Strengths of the Object-Oriented Model

Object-oriented models have rapidly become the model of choice for programming most new computer applications. Since most application programs need to deal with persistent data, adding persistence to objects is essential to making object-oriented applications useful in practice. There are three classes of solutions for implementing persistence in object-oriented applications: the gateway-based object persistence approach, which adds object-oriented programming access to persistent data stored in traditional, non-object-oriented data stores; the object-relational database management system (DBMS) approach, which enhances the extremely popular relational data model by adding object-oriented modeling features; and the object-oriented DBMS approach (also called the persistent programming language approach), which adds persistence support to objects in an object-oriented programming language. In this paper, we describe the major characteristics and requirements of object-oriented applications and how they may affect the choice of a system and method for making objects persistent in that application. We discuss the user and programming interfaces provided by various products and tools for object-oriented applications that create and manipulate persistent objects. In addition, we describe the pros and cons of choosing a particular mechanism for making objects persistent, including the implementation requirements and limitations imposed by each of the three approaches to object persistence mentioned above. Given that several object-oriented applications might need to share the same data, we describe how such applications can interoperate with each other. Finally, we describe the problems of, and solutions for, having object-oriented applications coexist with non-object-oriented (legacy) applications that access the same data.

Strengths and Weaknesses of the Object-Oriented Model

Benchmarks comparing ODBMSs and RDBMSs have shown that an ODBMS can be clearly superior for certain kinds of tasks. The main reason is that many operations are performed using navigational rather than declarative interfaces, and navigational access to data is usually implemented very efficiently by following pointers.[3]

Critics of navigational database technologies such as ODBMSs suggest that pointer-based techniques are optimized for very specific "search routes" or viewpoints. For general-purpose queries on the same information, however, pointer-based techniques tend to be slower and more difficult to formulate than relational queries. Thus, navigation appears to simplify specific known uses at the expense of general, unforeseen and varied future uses. (However, it may be possible to apply generic reordering and optimization of pointer routes in some cases.)

Other factors that work against ODBMSs are the lack of interoperability with a great number of tools and features taken for granted in the SQL world, including but not limited to industry-standard connectivity, reporting tools, OLAP tools, and backup and recovery standards. Additionally, object databases lack a formal mathematical foundation, unlike the relational model, and this in turn leads to weaknesses in their query support. This objection is offset, however, by the fact that some ODBMSs fully support SQL in addition to navigational access, e.g. Objectivity/SQL++, Matisse, and InterSystems Caché. Effective use may require compromises to keep both paradigms in sync.

In fact there is an intrinsic tension between the notion of encapsulation, which hides data and makes it available only through a published set of interface methods, and the assumption underlying much database technology, which is that data should be accessible to queries based on data content rather than predefined access paths. Database-centric thinking tends to view the world through a declarative and attribute-driven viewpoint, while OOP tends to view the world through a behavioral viewpoint. This is one of the many impedance mismatch issues surrounding OOP and databases.
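The difference between navigational and declarative access can be sketched in Python, using ordinary object references to stand in for an ODBMS and the standard-library `sqlite3` module for the relational side. The `Department`/`Employee` classes and the salary data are invented for illustration. Following a known route (an employee's colleagues) is a simple pointer walk, whereas an unforeseen content-based question (who earns more than 60000?) has to be hand-coded as a traversal of the graph, while SQL states it directly as a query.

```python
import sqlite3


class Department:
    def __init__(self, name):
        self.name = name
        self.employees = []  # direct references to Employee objects


class Employee:
    def __init__(self, name, salary, department):
        self.name = name
        self.salary = salary
        self.department = department  # direct reference ("pointer") to a Department
        department.employees.append(self)


# Navigational (object) style: data is reached by following references.
sales, engineering = Department("Sales"), Department("Engineering")
ann = Employee("Ann", 50000, sales)
bob = Employee("Bob", 70000, engineering)
cy = Employee("Cy", 65000, engineering)

# Known route, cheap to follow: "who works in Bob's department?"
colleagues = [e.name for e in bob.department.employees if e is not bob]

# Unforeseen question: "who earns more than 60000?" There is no ready-made route,
# so the code must know where to start and walk the whole graph by hand.
well_paid_nav = sorted(
    (d.name, e.name) for d in (sales, engineering) for e in d.employees if e.salary > 60000
)

# Declarative (relational) style: the same question is a query on data content.
db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE department (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employee (name TEXT, salary INTEGER, dept_id INTEGER REFERENCES department(id));
    INSERT INTO department VALUES (1, 'Sales'), (2, 'Engineering');
    INSERT INTO employee VALUES ('Ann', 50000, 1), ('Bob', 70000, 2), ('Cy', 65000, 2);
""")
well_paid_sql = db.execute("""
    SELECT d.name, e.name FROM employee AS e JOIN department AS d ON e.dept_id = d.id
    WHERE e.salary > 60000 ORDER BY d.name, e.name
""").fetchall()

print(colleagues, well_paid_nav, well_paid_sql)
```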

Although some commentators have written off object database technology as a failure, the essential arguments in its favor remain valid, and attempts to integrate database functionality more closely into object programming languages continue in both the research and the industrial communities.

Investigate the prototyping model and any object-oriented model.

For further discussion, see Object-oriented analysis and design and Object-oriented programming. The description of these Objects is a Schema.

As an example, in a model of a Payroll System, a Company is an Object. An Employee is another Object. Employment is a Relationship or Association. An Employee Class (or Object, for simplicity) has Attributes like Name, Birthdate, etc. The Association itself may be considered an Object, having Attributes or Qualifiers like Position, etc. An Employee Method may be Promote, Raise, etc.
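The Payroll example can be expressed as a minimal Python sketch using `dataclasses`. The class and attribute names follow the paragraph; the dates, salary figures and the percentage-based raise are assumptions. One design choice differs slightly from the text: `promote` and `raise_salary` are placed on the `Employment` association rather than on `Employee`, because that is where Position lives in this model; putting them on `Employee` would be an equally valid design.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Company:
    name: str


@dataclass
class Employee:
    name: str
    birthdate: date


@dataclass
class Employment:
    """The Employee-Company association, modeled as an object with its own attributes."""
    employee: Employee
    company: Company
    position: str
    salary: float

    def promote(self, new_position: str) -> None:
        self.position = new_position

    def raise_salary(self, percent: float) -> None:
        # "raise" is a reserved word in Python, so the method needs another name.
        self.salary = round(self.salary * (1 + percent / 100), 2)


acme = Company("Acme")
ann = Employee("Ann", date(1980, 5, 17))
job = Employment(ann, acme, position="Clerk", salary=40000.0)
job.promote("Supervisor")
job.raise_salary(10)
print(job.position, job.salary)  # Supervisor 44000.0
```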

The Model description or Schema may grow complex enough to require a Notation. Many notations have been proposed, based on different paradigms; they diverged and then converged into a more popular one known as UML.

An informal description or a Schema notation is translated into a specific programming language that supports Object-Oriented Programming (or a Class Type), into a Declarative Language, or into a Database schema. The translation is done by the programmer or, in the case of a Schema notation (created using a Module specific to the CASE tool application), by a Computer-Aided Software Engineering tool.

Posted by mathy at 12:52 AM

Saturday, December 13, 2008

Strengths and Weaknesses of SDLC Models

Waterfall Model

This is the most common and classic of the life cycle models, also referred to as a linear-sequential life cycle model. It is very simple to understand and use. In a waterfall model, each phase must be completed in its entirety before the next phase can begin. At the end of each phase, a review takes place to determine whether the project is on the right path and whether to continue or discard it. Unlike in the general model I described, phases do not overlap in a waterfall model.

Strengths

· Simple and easy to use.

· Easy to manage due to the rigidity of the model – each phase has specific deliverables and a review process.

· Phases are processed and completed one at a time.

· Works well for smaller projects where requirements are very well understood.

Weaknesses

· Adjusting scope during the life cycle can kill a project.

· No working software is produced until late during the life cycle.

· High amounts of risk and uncertainty.

· Poor model for complex and object-oriented projects.

· Poor model for long and ongoing projects.

V-Shaped Model

Strengths

· Simple and easy to use.

· Each phase has specific deliverables.

· Higher chance of success over the waterfall model due to the development of test plans early on during the life cycle.

· Works well for small projects where requirements are easily understood.

Weaknesses

· Very rigid, like the waterfall model.

· Little flexibility and adjusting scope is difficult and expensive.

· Software is developed during the implementation phase, so no early prototypes of the software are produced.

· Model doesn’t provide a clear path for problems found during testing phases.

Incremental Model

Strengths

· Generates working software quickly and early during the software life cycle.

· More flexible: less costly to change scope and requirements.

· Easier to test and debug during a smaller iteration.

· Easier to manage risk because risky pieces are identified and handled during their iteration.

· Each iteration is an easily managed milestone.

Weaknesses

· The phases of an iteration are rigid and do not overlap each other.

·Problems may arise pertaining to system architecture because not all requirements are gathered up front for the entire software life cycle.

Spiral Model

The spiral model is the most generic of the models. Most life cycle models can be derived as special cases of the spiral model. The spiral uses a risk management approach to software development. Some advantages of the spiral model are:

· defers elaboration of low risk software elements

· incorporates prototyping as a risk reduction strategy

· gives an early focus to reusable software

· accommodates life-cycle evolution, growth, and requirement changes

· incorporates software quality objectives into the product

· focuses on early detection of errors and design flaws

· sets completion criteria for each project activity to answer the question: "How much is enough?"

· uses identical approaches for development and maintenance

· can be used for hardware-software system development

Strengths

· High amount of risk analysis

· Good for large and mission-critical projects.

· Software is produced early in the software life cycle.

Weaknesses

· Can be a costly model to use.

· Risk analysis requires highly specific expertise.

· Project’s success is highly dependent on the risk analysis phase.

· Doesn’t work well for smaller projects.

Evolutionary Prototyping

Evolutionary prototyping uses multiple iterations of requirements gathering and analysis, design and prototype development. After each iteration, the result is analyzed by the customer. Their response creates the next level of requirements and defines the next iteration.

Strengths

· Customers can see steady progress

· This is useful when requirements are changing rapidly, when the customer is reluctant to commit to a set of requirements, or when no one fully understands the application area.

Weaknesses

· It is impossible to know at the outset of the project how long it will take.

· There is no way to know the number of iterations that will be required.

Evolutionary Prototyping Summary

The manager must be vigilant to ensure it does not become an excuse to do code-and-fix development.

Code-and-Fix

If you don't use a methodology, it's likely you are doing code-and-fix. Code-and-fix rarely produces useful results. It is very dangerous as there is no way to assess progress, quality or risk.

Strengths

· No time spent on "overhead" like planning, documentation, quality assurance, standards enforcement or other non-coding activities

· Requires little experience

Weaknesses

· Dangerous.

· No means of assessing quality or identifying risks.

· Fundamental flaws in approach do not show up quickly, often requiring work to be thrown out.

Code-and-Fix Summary

Code-and-fix is only appropriate for small throwaway projects like proof-of-concept, short-lived demos or throwaway prototypes.

Staged Delivery

Although the early phases cover the deliverables of the pure waterfall, the design is broken into deliverable stages for detailed design, coding, testing and deployment.

Strengths

· Can put useful functionality into the hands of customers earlier than if the product were delivered at the end of the project.

Weakness

· Doesn't work well without careful planning at both management and technical levels.

Staged Delivery Summary

For staged delivery, management must ensure that stages are meaningful to the customer. The technical team must account for all dependencies between different components of the system.

Evolutionary Delivery

Evolutionary delivery straddles evolutionary prototyping and staged delivery.

Strengths

· Enables customers to refine interface while the architectural structure is as

Weakness

· Doesn't work well without careful planning at both management and technical levels.

Evolutionary Delivery Summary

For evolutionary delivery, the initial emphasis should be on the core components of the system. These should consist of lower-level functions that are unlikely to be changed by customer feedback.

Design-to-Schedule

Like staged delivery, design-to-schedule is a staged release model. However, the number of stages to be accomplished is not known at the outset of the project.

Strengths

· Produces date-driven functionality, ensuring there is a product at the critical date.

· Covers for highly suspect estimates.

Weakness

· Won't be able to predict the full range of functionality.

Design-to-Schedule Summary

In design-to-schedule delivery, it is critical to prioritize features and plan stages so that the early stages contain the highest-priority features. Leave the lower-priority features for later.
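The prioritization rule for design-to-schedule delivery can be sketched as a simple greedy planner. The `plan_stages` and `delivered_by` helpers, the effort units and the fixed per-stage capacity are assumptions made for illustration; real design-to-schedule planning also has to account for dependencies between features. Features are taken strictly in priority order, so whenever the critical date arrives, the stages already delivered contain the most important features.

```python
def plan_stages(features, capacity_per_stage):
    """Group features into release stages, most important first.

    features: list of (name, priority, effort), where priority 1 is the highest.
    Each stage holds at most capacity_per_stage units of effort. Features are
    taken strictly in priority order, so a later stage never contains a
    feature more important than one in an earlier stage.
    """
    stages, current, used = [], [], 0
    for name, _priority, effort in sorted(features, key=lambda f: f[1]):
        if effort > capacity_per_stage:
            raise ValueError(f"feature {name!r} does not fit in any single stage")
        if used + effort > capacity_per_stage:
            stages.append(current)  # close the full stage and start a new one
            current, used = [], 0
        current.append(name)
        used += effort
    if current:
        stages.append(current)
    return stages


def delivered_by(stages, stages_completed):
    """Features in the product if only the first stages_completed stages are finished."""
    return [name for stage in stages[:stages_completed] for name in stage]


features = [
    ("reports", 3, 5),
    ("login", 1, 3),
    ("search", 2, 4),
    ("export", 4, 2),
    ("themes", 5, 4),
]
stages = plan_stages(features, capacity_per_stage=8)
print(stages)                   # [['login', 'search'], ['reports', 'export'], ['themes']]
print(delivered_by(stages, 2))  # ['login', 'search', 'reports', 'export']
```

If the deadline allows only two stages, the product ships with login, search, reports and export, and only the lowest-priority feature (themes) is left out.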

Posted by mathy at 12:34 AM

Friday, December 12, 2008

Software Life Cycle Models

Software Development Life Cycle (SDLC) is a methodology that is typically used to develop, maintain and replace information systems for improving the quality of the software design and development process. The typical phases are analysis, estimation, design, development, integration and testing and implementation. The success of software largely depends on proper analysis, estimation, design and testing before the same is implemented. This article discusses SDLC in detail and provides guidance for building successful software.

Methods

Life cycle models describe the interrelationships between software development phases. The common life cycle models are:

Spiral model

Incremental model

V-shaped model

Waterfall model

General model

Throwaway prototyping model

Evolutionary prototyping model

Code-and-fix model

Reusable software model

Automated software synthesis

Because the life cycle steps are described in very general terms, the models are adaptable and their implementation details will vary among different organizations. The spiral model is the most general. Most life cycle models can in fact be derived as special instances of the spiral model. Organizations may mix and match different life cycle models to develop a model more tailored to their products and capabilities.

The Spiral Model

The spiral model is a software development process combining elements of both design and prototyping-in-stages, in an effort to combine the advantages of top-down and bottom-up concepts. The spiral model was defined by Barry Boehm in his article "A Spiral Model of Software Development and Enhancement" from 1985. This model was not the first to discuss iterative development, but it was the first to explain why the iteration matters. As originally envisioned, the iterations were typically 6 months to 2 years long. Each phase starts with a design goal and ends with the client (who may be internal) reviewing the progress thus far. Analysis and engineering efforts are applied at each phase of the project, with an eye toward the end goal of the project.

1. The new system requirements are defined in as much detail as possible. This usually involves interviewing a number of users representing all the external or internal users and other aspects of the existing system.

2. A preliminary design is created for the new system.

3. A first prototype of the new system is constructed from the preliminary design. This is usually a scaled-down system, and represents an approximation of the characteristics of the final product.

4. A second prototype is evolved by a fourfold procedure:

   1. evaluating the first prototype in terms of its strengths, weaknesses, and risks;

   2. defining the requirements of the second prototype;

   3. planning and designing the second prototype;

   4. constructing and testing the second prototype.

5. At the customer's option, the entire project can be aborted if the risk is deemed too great. Risk factors might involve development cost overruns, operating-cost miscalculation, or any other factor that could, in the customer's judgment, result in a less-than-satisfactory final product.

6. The existing prototype is evaluated in the same manner as was the previous prototype, and, if necessary, another prototype is developed from it according to the fourfold procedure outlined above.

7. The preceding steps are iterated until the customer is satisfied that the refined prototype represents the final product desired.

8. The final system is constructed, based on the refined prototype.
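The iterative core of the spiral steps (steps 3 to 8) can be sketched as a loop. The `Evaluation` record, the numeric risk score and the `max_risk` threshold are hypothetical simplifications: in practice the customer's risk judgment (step 5) is not a single number. `build_prototype` and `evaluate` are caller-supplied functions standing in for the real construction and review work.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    risk: float      # estimated risk of continuing, 0.0 (none) to 1.0 (certain failure)
    satisfied: bool  # does the customer accept this prototype as the final product?


def spiral(build_prototype, evaluate, max_risk=0.7, max_rounds=10):
    """Iterate prototypes until the customer is satisfied or the risk is too great.

    build_prototype(round_no, previous_evaluation) returns a prototype;
    evaluate(prototype) returns an Evaluation. Returns (outcome, rounds_used).
    """
    evaluation = None
    for round_no in range(1, max_rounds + 1):
        prototype = build_prototype(round_no, evaluation)
        evaluation = evaluate(prototype)
        if evaluation.risk > max_risk:
            return "aborted", round_no             # step 5: customer aborts the project
        if evaluation.satisfied:
            return "build final system", round_no  # step 8: construct the final system
    return "no decision", max_rounds


# Hypothetical project: risk falls with each prototype; accepted on the third.
outcome = spiral(
    build_prototype=lambda n, previous: {"version": n},
    evaluate=lambda p: Evaluation(risk=0.5 / p["version"], satisfied=p["version"] >= 3),
)
print(outcome)  # ('build final system', 3)
```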

Incremental Model

The incremental model is an intuitive approach to the waterfall model. Multiple development cycles take place here, making the life cycle a “multi-waterfall” cycle. Cycles are divided up into smaller, more easily managed iterations. Each iteration passes through the requirements, design, implementation and testing phases.

A working version of software is produced during the first iteration, so you have working software early on during the software life cycle. Subsequent iterations build on the initial software produced during the first iteration.

V-Shaped Model

Just like the waterfall model, the V-shaped life cycle is a sequential path of execution of processes. Each phase must be completed before the next phase begins. Testing is emphasized in this model more than in the waterfall model, though: the testing procedures are developed early in the life cycle, before any coding is done, during each of the phases preceding implementation.

The high-level design phase focuses on system architecture and design. An integration test plan is also created in this phase, to test the ability of the pieces of the software system to work together.

The implementation phase is, again, where all coding takes place. Once coding is complete, the path of execution continues up the right side of the V where the test plans developed earlier are now put to use.

Waterfall Model


Software life cycle models describe phases of the software cycle and the order in which those phases are executed. There are tons of models, and many companies adopt their own, but all have very similar patterns. The general, basic model is shown below:

General Life Cycle Model

Requirements → Design → Implementation → Testing

Each phase produces deliverables required by the next phase in the life cycle. Requirements are translated into design. Code is produced during implementation that is driven by the design. Testing verifies the deliverable of the implementation phase against requirements.

Requirements

Business requirements are gathered in this phase. This phase is the main focus of the project managers and stakeholders. Meetings with managers, stakeholders and users are held in order to determine the requirements. Who is going to use the system? How will they use the system? What data should be input into the system? What data should be output by the system? These are general questions that get answered during a requirements gathering phase. This produces a nice big list of functionality that the system should provide, describing the functions the system should perform, the business logic that processes data, what data is stored and used by the system, and how the user interface should work. The overall result describes the system as a whole and what it does, not how it is actually going to do it.

Design

The software system design is produced from the results of the requirements phase. Architects have the ball in their court during this phase, and this is the phase in which their focus lies. This is where the details of how the system will work are produced. Architecture (including hardware and software), communication, and software design (UML is produced here) are all part of the deliverables of a design phase.

Implementation

Code is produced from the deliverables of the design phase during implementation, and this is the longest phase of the software development life cycle. For a developer, this is the main focus of the life cycle because this is where the code is produced. Implementation may overlap with both the design and testing phases. Many tools exist (CASE tools) to automate the production of code using information gathered and produced during the design phase.

Testing

During testing, the implementation is tested against the requirements to make sure that the product is actually solving the needs addressed and gathered during the requirements phase. Unit tests and system/acceptance tests are done during this phase. Unit tests act on a specific component of the system, while system tests act on the system as a whole.
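To make the distinction between unit and system tests concrete, here is a minimal sketch using Python's standard `unittest` module. The order-total system, its two components (`subtotal` and `add_tax`) and the 10% tax rate are invented for illustration. The unit tests exercise each component alone; the system test checks the assembled system against a requirement stated in terms the user would recognize.

```python
import unittest


def subtotal(items):
    """Component 1: sum of price * quantity for (price, quantity) pairs."""
    return sum(price * qty for price, qty in items)


def add_tax(amount, rate=0.10):
    """Component 2: add tax at a hypothetical 10% rate, rounded to cents."""
    return round(amount * (1 + rate), 2)


def order_total(items):
    """The assembled 'system': the two components wired together."""
    return add_tax(subtotal(items))


class UnitTests(unittest.TestCase):
    """Each test exercises one component in isolation."""

    def test_subtotal(self):
        self.assertEqual(subtotal([(2.50, 2), (1.00, 3)]), 8.00)

    def test_add_tax(self):
        self.assertEqual(add_tax(100.00), 110.00)


class SystemTests(unittest.TestCase):
    """Tests the whole system against a (hypothetical) requirement:
    two $2.50 items plus three $1.00 items cost $8.80 including tax."""

    def test_order_total_meets_requirement(self):
        self.assertEqual(order_total([(2.50, 2), (1.00, 3)]), 8.80)
```

Saved as a module, the tests can be run with `python -m unittest` followed by the module name.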

So, in a nutshell, that is a very basic overview of the general software development life cycle model. Now let's delve into some of the traditional and widely used variations.

Throwaway Prototyping Model

Useful for "proof of concept" work or in situations where requirements and users' needs are unclear or poorly specified. The approach is to construct a quick and dirty partial implementation of the system during or before the requirements phase.

Use it in projects that have low risk in such areas as losing budget, schedule predictability and control, large-system integration problems, or coping with information sclerosis, but high risk in user interface design.

Code-and-Fix

If you don't use a methodology, it's likely you are doing code-and-fix. Code-and-fix rarely produces useful results. It is very dangerous as there is no way to assess progress, quality or risk.

Code-and-fix is only appropriate for small throwaway projects like proof-of-concept, short-lived demos or throwaway prototypes.

Posted by mathy at 5:07 AM

Thursday, December 11, 2008

NETWORK TOPOLOGIES

Network topologies are categorized into the following basic types:

bus

ring

star

tree

mesh

More complex networks can be built as hybrids of two or more of the above basic topologies.

BUS

Bus consists of a single linear cable called a trunk.

Data is sent to all computers on the trunk. Each computer examines EVERY packet on the wire to determine whom the packet is for and accepts only messages addressed to it.

Bus is a passive topology.

Performance degrades as more computers are added to the bus.

Signal bounce is eliminated by a terminator at each end of the bus.

Barrel connectors can be used to lengthen cable.

Usually uses Thinnet or Thicknet

Both of these require 50-ohm terminators

Good for a temporary, small (fewer than 10 people) network

But it’s difficult to isolate malfunctions and if the backbone goes down, the entire network goes down.

Repeaters can be used to regenerate signals.

Ethernet bus topologies are relatively easy to install and don't require much cabling compared to the alternatives. 10Base-2 ("ThinNet") and 10Base-5 ("ThickNet") both were popular Ethernet cabling options years ago. However, bus networks work best with a limited number of devices. If more than a few dozen computers are added to a bus, performance problems will likely result. In addition, if the backbone cable fails, the entire network effectively becomes unusable.
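The broadcast-and-filter behaviour of a bus can be simulated in a few lines. The `Frame`, `Station` and `Bus` classes and the single-letter addresses are hypothetical stand-ins for real Ethernet frames and MAC addresses, and collisions are ignored entirely. Every station other than the sender examines the frame, but only the addressed station keeps it, which is also why adding stations adds work for everyone on the trunk.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    source: str
    destination: str
    payload: str


@dataclass
class Station:
    address: str
    inbox: list = field(default_factory=list)
    frames_examined: int = 0

    def on_signal(self, frame: Frame) -> None:
        # Every station examines every frame on the wire...
        self.frames_examined += 1
        # ...but accepts only frames addressed to it.
        if frame.destination == self.address:
            self.inbox.append(frame.payload)


class Bus:
    """A passive shared trunk: whatever one station sends reaches all stations."""

    def __init__(self):
        self.stations = []

    def attach(self, station: Station) -> None:
        self.stations.append(station)

    def send(self, frame: Frame) -> None:
        for station in self.stations:
            if station.address != frame.source:  # a sender ignores its own frame
                station.on_signal(frame)


bus = Bus()
a, b, c = Station("A"), Station("B"), Station("C")
for station in (a, b, c):
    bus.attach(station)
bus.send(Frame(source="A", destination="C", payload="hello"))
print(c.inbox, b.inbox, b.frames_examined)  # ['hello'] [] 1
```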

Ring Topology

To implement a ring network, one typically uses FDDI, SONET, or Token Ring technology.

Usually seen in a Token Ring or FDDI (fiber optic) network

Each computer acts as a repeater and keeps the signal strong, so no separate repeaters are needed on a ring topology

No termination is required because it's a ring

Token passing is used in Token Ring networks. The token is passed from one computer to the next, and only the computer holding the token can transmit. The receiving computer copies the data, and the frame continues around the ring back to the sending computer with an acknowledgment. After verification, the sending computer removes the frame and releases a new token.

Relatively easy to install, requiring minimal hardware
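A simplified token-passing simulation is sketched below. It assumes the common behaviour in which the frame travels all the way around the ring back to its sender before the token is released, and it ignores token loss, priorities and timing. The `simulate_token_ring` helper, station names and queued frames are invented. Note that station A's second frame has to wait until the token comes round again.

```python
def simulate_token_ring(ring, outgoing, max_token_passes=100):
    """Simulate token passing on a ring.

    ring: station names in ring order.
    outgoing: {station: [(destination, payload), ...]} frames waiting to be sent.
    Returns the deliveries as (sender, destination, payload) in the order they happen.
    """
    queues = {station: list(outgoing.get(station, [])) for station in ring}
    delivered = []
    holder = 0  # index of the station currently holding the token
    for _ in range(max_token_passes):
        if not any(queues.values()):
            break  # nothing left to send
        sender = ring[holder]
        if queues[sender]:
            # Only the token holder may transmit, and only one frame per token.
            destination, payload = queues[sender].pop(0)
            # The frame is repeated station by station around the ring; the
            # destination copies it, and the frame continues back to the sender,
            # which removes it from the ring.
            position = (holder + 1) % len(ring)
            while position != holder:
                if ring[position] == destination:
                    delivered.append((sender, destination, payload))
                position = (position + 1) % len(ring)
        # Release the token to the next station downstream.
        holder = (holder + 1) % len(ring)
    return delivered


deliveries = simulate_token_ring(
    ["A", "B", "C", "D"],
    {"A": [("C", "a1"), ("B", "a2")], "C": [("A", "c1")]},
)
print(deliveries)  # [('A', 'C', 'a1'), ('C', 'A', 'c1'), ('A', 'B', 'a2')]
```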

Star Topology

Compared to the bus topology, a star network generally requires more cable, but a failure in any star network cable will only take down one computer's network access and not the entire LAN. (If the hub fails, however, the entire network also fails.)

Computers are connected by cable segments to a centralized hub.

Requires more cable.

If hub goes down, entire network goes down.

If a computer goes down, the network functions normally.

most scalable and reconfigurable of all topologies

Mesh Topology

The mesh topology connects each computer on the network to the others.

Meshes use a significantly larger amount of network cabling than do the other network topologies, which makes it more expensive.

The mesh topology is highly fault tolerant.

Every computer has multiple possible connection paths to the other computers on the network, so a single cable break will not stop network communications between any two computers.
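The cabling cost and fault tolerance of a full mesh can be checked with a short sketch. A full mesh of n computers needs n(n - 1)/2 cables, compared with n cables for a star. The helpers `full_mesh_cables` and `can_communicate` and the four-computer networks are illustrative; `can_communicate` is an ordinary breadth-first search over the remaining cables.

```python
from collections import deque
from itertools import combinations


def full_mesh_cables(n):
    # Each of n computers links to the other n - 1; every cable joins two computers.
    return n * (n - 1) // 2


def can_communicate(links, a, b):
    """Breadth-first search over undirected cable links: is there a path from a to b?"""
    neighbours = {}
    for x, y in links:
        neighbours.setdefault(x, set()).add(y)
        neighbours.setdefault(y, set()).add(x)
    seen, queue = {a}, deque([a])
    while queue:
        node = queue.popleft()
        for nxt in neighbours.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return b in seen


computers = ["A", "B", "C", "D"]
mesh = list(combinations(computers, 2))   # every pair gets its own cable
star = [(pc, "hub") for pc in computers]  # every computer cabled to one hub

print(len(mesh), full_mesh_cables(4), full_mesh_cables(10))  # 6 6 45

# Cut one cable in each network: A-B in the mesh, A-hub in the star.
mesh_cut = [link for link in mesh if link != ("A", "B")]
star_cut = [link for link in star if link != ("A", "hub")]
print(can_communicate(mesh_cut, "A", "B"))  # True: traffic can go A-C-B
print(can_communicate(star_cut, "A", "B"))  # False: A has lost its only cable
```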

Star Bus Topology

Several star topologies linked with a linear bus.

No single computer can take the whole network down. If a single hub fails, only the computers and hubs connected to that hub are affected.

Star Ring Topology

Also known as star wired ring because the hub itself is wired as a ring. This means it's a physical star, but a logical ring.

This topology is popular for Token Ring networks because it is easier to implement than a physical ring, but it still provides the token passing capabilities of a physical ring inside the hub.

Just like in the ring topology, computers are given equal access to the network media through the passing of the token.

A single computer failure cannot stop the entire network, but if the hub fails, the ring that the hub controls also fails.

Hybrid Mesh

most important aspect is that a mesh is fault tolerant

a true mesh is expensive because of all the wire needed

Another option is to mesh only the servers that contain information that everyone has to get to. This way the servers (not all the workstations) have fault tolerance at the cabling level.

Posted by mathy at 2:44 AM

Tuesday, December 9, 2008

Project Management

Project management is a carefully planned and organized effort to accomplish a specific (and usually one-time) goal, for example, constructing a building or implementing a new computer system. Project management includes developing a project plan, which means defining project goals and objectives, specifying tasks or how the goals will be achieved, identifying what resources are needed, and associating budgets and timelines for completion. It also includes implementing the project plan, with careful controls to stay on the "critical path", that is, the longest chain of dependent tasks, which sets the shortest possible project duration; any delay to a task on that path delays the whole project. Project management usually follows major phases (with various titles for these phases), including feasibility study, project planning, implementation, evaluation and support/maintenance. (Program planning is usually broader in scope than project planning, but not always.)
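The critical path idea can be illustrated with a small calculation. The task list, durations and dependencies below are invented, and the sketch assumes the dependencies contain no cycles. Each task's earliest finish is its duration plus the latest earliest-finish among its prerequisites; walking back from the last task to finish gives the critical path.

```python
from functools import lru_cache

# Hypothetical project: task -> (duration in days, prerequisite tasks)
TASKS = {
    "requirements": (5, []),
    "design": (10, ["requirements"]),
    "order_hardware": (7, ["requirements"]),
    "coding": (20, ["design"]),
    "install_hardware": (3, ["order_hardware"]),
    "testing": (8, ["coding", "install_hardware"]),
}


def critical_path(tasks):
    """Return (minimum project duration, tasks on the critical path in order).

    Assumes the dependencies form a DAG (no cycles).
    """

    @lru_cache(maxsize=None)
    def earliest_finish(name):
        duration, prereqs = tasks[name]
        # A task can start only after all of its prerequisites have finished.
        return duration + max((earliest_finish(p) for p in prereqs), default=0)

    # The project ends when the latest-finishing task ends; walk back from it,
    # always choosing the prerequisite that finishes last (the one holding things up).
    last = max(tasks, key=earliest_finish)
    path = [last]
    while tasks[path[-1]][1]:
        path.append(max(tasks[path[-1]][1], key=earliest_finish))
    return earliest_finish(last), path[::-1]


duration, path = critical_path(TASKS)
print(duration, path)  # 43 ['requirements', 'design', 'coding', 'testing']
```

Here the hardware tasks are off the critical path: installation can finish as early as day 15 but testing cannot start before day 35, so they can slip by up to 20 days without affecting the end date, whereas any delay to design or coding delays the whole project.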

Project management is the application of knowledge, skills, tools, and techniques to describe, organize, oversee and control the various project processes.

Project Management is the ongoing process of directing and coordinating all the steps in the development of an information system. Effective project management is necessary throughout the entire systems development and implementation process. The need for effective project management is most often recognized during system development.

The goal of project management is to produce an information system that is acceptable to its users and that is developed within the specified time frame and budget. The acceptability, deadline and budget criteria must all be met for a project to be considered completely successful. Failing to meet any one of these three criteria usually indicates a failure, at least in part, in project management.

According to the Project Management Institute, the components of project management are organized into nine Project Management Knowledge Areas.

But perhaps project management can best be described in terms of the things that you need to do to successfully manage a project:

Project Integration Management:

Develop and manage a project plan. The processes required to ensure that elements of the project are properly coordinated. It consists of project plan development, project plan execution, and overall change control.

Project Scope Management:

Plan, define and manage project scope. The processes required to ensure that all the work required, and only the work required, is included to complete the project successfully. It consists of initiation, scope planning, scope definition, scope verification and scope change control.

Project Time Management:

Create a project schedule. The processes required to ensure the project is completed in a timely way. It consists of activity definition, activity sequencing, activity duration estimating, schedule development, and schedule control.
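Schedule development, and the "critical path" mentioned in the definition of project management, can be illustrated with a forward pass of the critical path method (CPM), one common scheduling technique. This minimal Python sketch assumes the task dependencies contain no cycles; the task names and durations (in days) are hypothetical, and it reports one critical path without computing slack for the other tasks.

```python
def critical_path(tasks):
    """tasks: dict mapping name -> (duration, [predecessor names]).

    Returns (project_duration, tasks on one critical path in order).
    Assumes the dependency graph is acyclic.
    """
    finish = {}  # earliest finish time of each task
    via = {}     # the predecessor that determined each task's earliest start

    def earliest_finish(name):
        if name not in finish:
            duration, preds = tasks[name]
            start = 0
            via[name] = None
            for p in preds:  # a task can start only when all predecessors finish
                f = earliest_finish(p)
                if f > start:
                    start, via[name] = f, p
            finish[name] = start + duration
        return finish[name]

    end = max(tasks, key=earliest_finish)  # the task that finishes last
    path, node = [], end
    while node is not None:  # walk back through the tasks that set each start
        path.append(node)
        node = via[node]
    return finish[end], path[::-1]

TASKS = {
    "requirements": (5, []),
    "design": (10, ["requirements"]),
    "hardware": (7, ["requirements"]),
    "coding": (15, ["design"]),
    "install": (3, ["hardware"]),
    "testing": (6, ["coding", "install"]),
}

DURATION, PATH = critical_path(TASKS)
print(DURATION, PATH)  # 36 ['requirements', 'design', 'coding', 'testing']
```

A delay to any task on this path delays the whole project, while "hardware" and "install" could slip by several days without moving the finish date.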

Project Cost Management:

Plan resources and budget costs. The processes required to ensure the project is completed within the approved budget. It consists of resource planning, cost estimating, cost budgeting, and cost control.

Project Quality Management:

Develop a quality plan and carry out quality assurance and quality control activities. The processes required to ensure that the project satisfies the needs for which it was undertaken. It consists of quality planning, quality assurance, and quality control.

Project Human Resource Management:

Perform organizational planning, manage staff acquisition and promote team development. The processes required to make the most effective use of the people involved in the project. It consists of organizational planning, staff acquisition, and team development.

Project Communications Management:

Develop a communications plan. The processes required to ensure timely and appropriate generation, collection, dissemination, storage, and ultimate disposition of project information. It consists of communications planning, information distribution, performance reporting, and administrative closure.

Project Risk Management:

Identify risks, prepare risk mitigation plans and execute contingency actions. The processes concerned with identifying, analyzing, and responding to project risk. It consists of risk identification, risk quantification, risk response development, and risk response control.

Project Procurement Management:

The processes required to acquire goods and services from outside the organization. It consists of procurement planning, solicitation planning, solicitation, source selection, contract administration, and contract closeout.

Activities of a Project Manager:

A project manager is a facilitator. The ideal project manager does whatever it takes to ensure that the members of the software project team can do their work. This means working with management to ensure they provide the resources and support required as well as dealing with team issues that are negatively impacting a team's productivity. The project manager must possess a combination of skills including the ability to ask penetrating questions, identify unstated assumptions, and resolve personnel conflicts along with more systematic management skills.

The actions of a project manager should be almost unnoticeable; when a project is moving along smoothly, people are sometimes tempted to question the need for a project manager. However, when you take the skilled project manager out of the mix, the project is much more likely to miss deadlines and exceed budgets.

The project manager is the one who is responsible for making decisions in such a way that risk is controlled and uncertainty minimized. Every decision made by the project manager should ideally benefit the project directly.

On small projects, the project manager will likely deal directly with all members of the software development team. On larger projects, there are often leads (a lead developer, lead graphic designer, lead analyst, etc.) who report directly to the project manager.

Essential qualities of a project manager:

a) Strong leadership ability

Leadership is getting things done through others; the project manager achieves results through the project team. He needs to inspire the project team members and create a vision of the result. Project leadership requires a participative and consultative leadership style, in which the project manager provides guidance and coaching to the project team. It also requires involvement and empowerment of the project team. He should motivate the team to achieve its objectives.

b) Ability to develop people

The effective project manager has a commitment to the training and development of people working on the project. The project manager should establish an environment where people can learn from the tasks they perform and the situations they experience. He should provide opportunities for learning and development and encourage individuals to take them. A final way in which a project manager can develop people is by having them attend formal training sessions.

c) Excellent communication skills

Project managers must be good communicators. It’s important for the project manager to provide timely feedback to the team and customer. The project manager needs to communicate regularly with the project team, as well as with any subcontractors, the customer and their own company’s upper management. Effective and frequent communication is critical for keeping the project moving, identifying potential problems, soliciting suggestions to improve project performance, maintaining customer satisfaction and avoiding surprises.

d) Ability to handle stress

Project managers need to handle the stress that can arise from work situations. Stress is likely to be high when critical problems arise. Project managers can improve their ability to handle stress by keeping physically fit through regular exercise and good nutrition. They can also organize stress-relief activities such as outdoor games.

e) Good interpersonal skills

Good interpersonal skills depend on good oral and written communication. The project manager needs to establish clear expectations of the members of the project team so that everyone knows the importance of their roles in the project. A project manager needs good interpersonal skills to influence the thinking and actions of others and to deal with disagreement or divisiveness among team members.

f) Problem-solving skills

A project manager should encourage the project team to identify problems early and solve them. Team members should be asked to suggest solutions to the problem. The project manager should then use analytical skills to evaluate the information and develop the optimal solution.

Developing skills needed to be a project manager:

Gain experience.

Seek out feedback from others.

Conduct a self-evaluation, and learn from your mistakes.

Interview project managers who have the skills you want to develop in yourself.

Participate in training programs and volunteer for other work.

Posted by mathy at 12:34 AM

Monday, December 8, 2008

Windows Explorer keyboard shortcuts

END - Display the bottom of the active window.

HOME - Display the top of the active window.

NUM LOCK+ASTERISK on numeric keypad (*) - Display all subfolders under the selected folder.

NUM LOCK+PLUS SIGN on numeric keypad (+) - Display the contents of the selected folder.

NUM LOCK+MINUS SIGN on numeric keypad (‐) - Collapse the selected folder

LEFT ARROW - Collapse current selection if it's expanded, or select parent folder.

RIGHT ARROW - Display current selection if it's collapsed, or select first subfolder.

Posted by mathy at 4:15 AM

Sunday, December 7, 2008

System Analysis Design

Analysis

The third phase of the SDLC, in which the current system is studied and alternative replacement systems are proposed. Its deliverables are a description of the current system and where its problems or opportunities lie, with a general recommendation on how to fix, enhance, or replace it, plus an explanation of alternative systems and a justification for the chosen alternative.

Analysis Phase – One phase of the SDLC whose objective is to understand the user’s needs and develop requirements

Gather information

Define system Requirements

Build Prototypes for Discovery of Requirements

Prioritize Requirements

Generate and Evaluate Alternatives

Review recommendations with Management

Requirement Analysis

Based on the survey that was conducted, several existing solutions currently in use were studied and analyzed. The following requirements will be incorporated into the “Modern Acrobatic Centre” system so that it is successfully adopted by users.

a. Easy to use.

The system will not incorporate complicated functions that would make it difficult to use. The user interface will be properly designed so that users can understand it clearly.

b. Easy to maintain.

The system will be developed with existing technology so that it is easy to maintain and does not depend on proprietary technology.

c. Easy to support.

Developing the system in the Visual Basic environment will make it easy to support. By ensuring that the user interface is clear and that good help modules are designed for the system, we will keep the support it requires to a minimum.

d. Support multiple levels of security control for access to the different parts of the system.

e. The system must be able to be updated frequently.

f. The system must allow for future enhancement.
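Requirement d. could be met in several ways; one common approach is to give each user a numeric access level and each part of the system a minimum required level. The Python sketch below shows only that idea: the level names and system parts are hypothetical, and the actual system is planned for Visual Basic, not Python.

```python
from enum import IntEnum

class Level(IntEnum):
    """Hypothetical security levels; a higher value grants more access."""
    MEMBER = 1
    STAFF = 2
    ADMIN = 3

# Hypothetical parts of the system and the minimum level needed to open each one.
REQUIRED_LEVEL = {
    "class_schedule": Level.MEMBER,
    "member_records": Level.STAFF,
    "payments": Level.STAFF,
    "user_accounts": Level.ADMIN,
}

def can_access(user_level, part):
    """True if a user at `user_level` may open `part`; unknown parts are denied."""
    required = REQUIRED_LEVEL.get(part)
    return required is not None and user_level >= required

print(can_access(Level.STAFF, "payments"))        # True
print(can_access(Level.MEMBER, "user_accounts"))  # False
```

Denying unknown parts by default means a newly added screen stays locked until someone assigns it a level, which also suits requirement f.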

Design